How can we mitigate risk within digital peer support communities?

September 07 2022

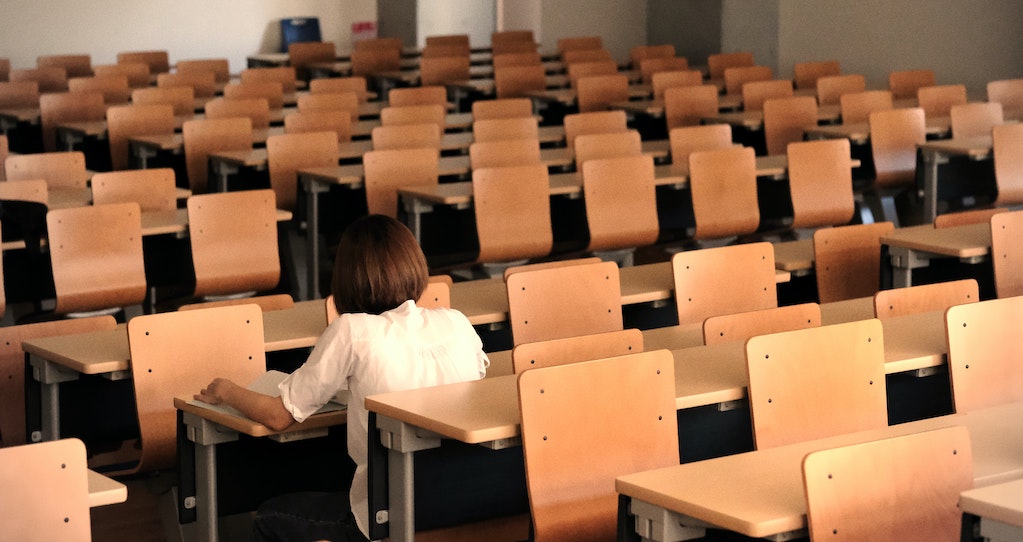

Offering digital peer support is an effective and accessible way for universities, colleges, and other institutions to enhance traditional student mental health services. However, before adopting these platforms, institutions must understand the associated risks and how to mitigate them.

This article explores the risks of digital peer support communities and suggests considerations for managing them.

Understanding the benefits of digital peer support

First, for leaders weighing whether to offer digital peer support, it is important to establish why these services are valuable for addressing mental health needs at a population level, despite the risks.

Among people living with serious mental illness, participation in online peer-to-peer communities is associated with a greater sense of social connectedness and group belonging, and with more effective strategies for day-to-day coping (Naslund et al., 2016).

In a study on in-person peer groups by Batchelor et al. (2019), 77.4% of surveyed UK students thought that peer support would benefit their wellbeing. Respondents also felt that, compared with professional support, peer support would offer fewer restrictions, greater insight and understanding (49.5%), easier access (43.9%), and more choice.

The study also highlighted some of the key challenges of face-to-face peer support. When asked what would stop them accessing peer support, participants most often cited concerns about confidentiality (71.5%), trust (60.6%), and whether peers would be skilled enough to support others (48.9%). Participants were also concerned about stigma (36%) and the emotional impact of offering support (31.6%).

Digital peer support communities give leaders an opportunity to address these challenges, for example through anonymity and professional moderation, while offering a service that is more cost-effective and scalable than one-to-one counselling models.

The following considerations can guide risk management for peer-to-peer digital platforms:

Anonymity

Many observers cite anonymity on social media as a contributor to inappropriate behaviour online. For this reason, some social platforms, such as Facebook, ban anonymous accounts, on the view that such accounts are more likely to spread misinformation or to harass or harm other users. In addition, users of platforms that permit anonymity run the risk of being doxed (having their personal information shared publicly) by other users. On the other hand, requiring people to reveal their identity can inhibit those who would be more willing to join online conversations if they could do so anonymously.

In the Togetherall community, for example, 64% of students said they were willing to share their thoughts and feelings because they could do so anonymously. Anonymity therefore encourages candid participation, but it must be paired with safeguards that prevent it from becoming a shield behind which users behave inappropriately. Three key safeguards are a supportive community, moderation by licensed clinicians, and clear risk-management procedures for those at risk.

Supportive community

For online peer-to-peer experiences to be positive and beneficial for students, the community must be supportive and non-judgmental. Students must be able to trust that if they become vulnerable by sharing their feelings, the community will respond in a supportive manner.

In Togetherall’s community, qualified mental health professionals known as “Wall Guides” oversee activity and protect the community by keeping it positive and inclusive of all participants, while also empowering members to discuss difficult subjects. Wall Guides also step in with a compassionate intervention if a student seems overwhelmed or self-critical, and can escalate those in need to a senior clinical team if necessary.

Clinical moderation

Research has linked unmonitored social media use to depression, body image concerns, self-esteem issues, social anxiety, and other problems. In response to criticism, Facebook-owned Instagram pledged to be more selective about the content shown to young people and to intervene if users dwell on specific types of problematic content (Instagram, 2021).

Most social platforms rely heavily on artificial intelligence (AI) to monitor content, but AI moderation can be crude and frustrating for users. It is a blunt instrument: it warns or suspends users when it detects problematic keywords, but it frequently gets this wrong because it cannot reliably assess context and meaning. As a result, it may flag benign content as problematic because it cannot interpret nuanced mental health discussions. Conversely, because users routinely phrase content to evade flagging systems, AI often misses content that is genuinely problematic.

TikTok users, for example, often substitute numbers or symbols for letters to bypass moderation algorithms. Because TikTok removes content that mentions words such as “death,” “dying,” or “suicide,” users write variants such as “s*uc1de.” In 2021, TikTok users also began using the euphemism “unalive,” and #unalive quickly reached 10 million views (Skinner, 2021).
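To make these two failure modes concrete, here is a minimal Python sketch of a purely keyword-based filter. It is a hypothetical illustration, not how TikTok or any other platform actually moderates content: the word list `BLOCKED_WORDS` and the function `naive_flag` are assumptions chosen to mirror the words named above. Run as written, it flags a benign, supportive post while letting the obfuscated spelling and the euphemism through.

```python
import re

# Hypothetical block list, based only on the words named in the text above.
# Real platforms' moderation rules are not public and are far more complex.
BLOCKED_WORDS = {"death", "dying", "suicide"}


def naive_flag(post: str) -> bool:
    """Flag a post if any alphabetic word in it exactly matches the block list."""
    # Lower-case the post and split it into runs of letters a-z only,
    # so digits and symbols (as in "s*uc1de") break words apart.
    words = re.findall(r"[a-z]+", post.lower())
    return any(word in BLOCKED_WORDS for word in words)


posts = {
    # Benign, supportive message: flagged anyway (false positive).
    "supportive": "I went to a suicide prevention talk today and it really helped.",
    # Obfuscated spelling: not flagged (false negative).
    "obfuscated": "some days I think about s*uc1de",
    # Euphemism: not flagged (false negative).
    "euphemism": "I keep thinking about being unalive",
}

for label, text in posts.items():
    print(f"{label}: flagged={naive_flag(text)}")
```

Normalising common substitutions (for example, treating `1` as `i`) would catch some variants, but users adapt quickly, and no word list can tell a supportive post from a harmful one. That is the gap human moderators are positioned to fill.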

In contrast, human moderators supported by technology can evaluate complex content and make professionally informed judgments about what action to take. Qualified clinicians are better placed still, because they can draw on their education and professional experience to decide which situations to escalate, which to dismiss, and which to monitor over time.

Clinical moderators can also evaluate the tone and effect of each member’s participation to decide when a user is creating a problematic situation. The moderator can then take steps to remind the user of the community rules and, if violations continue, remove the user from participation. This results in a better and safer community experience.

Clear safeguarding policies

Clear policies should set out what moderators do when members raise concerns about something posted in the community, and when participants appear to need additional support.

Most social media platforms have some safety guidelines in place, but these rely on AI and on the judgment of the community or trained peers. Instagram, for instance, asks members to report other members who seem to be suicidal, and its escalation measures include showing the user a pop-up with helpline information and/or automatically requesting a police wellness check.

In contrast, Togetherall provides 24/7 clinical moderation, with Wall Guides providing continuous coverage and putting safety measures in place when a situation requires escalated support. At any time of day or night, they assess whether a participant is at risk of harming themselves or others and can reach out to the member to coordinate higher levels of care, often working hand in hand with colleges and universities.

Togetherall’s first aim is to enable and empower the student at risk to be an active participant in their own safety and treatment. This may mean helping the student connect with local mental health providers or student resources. Wall Guides also use their training and experience to make judgment calls about whether to escalate to emergency services.

Conclusion

Today’s students arrive at college or university already active in a range of digital peer communities, many of which are unmoderated, lack safeguarding mechanisms, and can be unhealthy for some users. The question, then, is not whether students should use a digital peer community (they already do), but whether institutions will offer a healthy alternative that prioritises student mental health.

Putting risk management policies in place is therefore a strategic step: it keeps students safe while allowing them to enjoy the wellbeing benefits of participating in peer-to-peer communities.
